When the internet became mainstream, it had a huge effect on how organizations and teams worked and collaborated. The whole digital software product space came into existence at that time. AI is an equally big new influence affecting teams today, and the role of Product Leadership has to evolve again.

There are two main perspectives to take in analyzing what this means for our role as product leaders:

- Teams are heavily utilizing GenAI tools to get their work done.

=> What does the use of AI tools in our teams mean for performance, outcomes, cost, collaboration, etc.? How might it create revenue opportunities or efficiencies?

- Teams build AI products or parts of their product experience augmented with AI.

=> What is there to know about successfully building AI product experiences? How will distribution and buying behaviors change and how can we get ready for this?

From both perspectives, the role of leadership is shifting and evolving. I’ve been talking to many product leaders about this in the past weeks and months, and there are some themes emerging.

The HOW in product leadership is affected more than the WHY

There is general agreement among product leaders today that most of HOW we go about creating product outcomes is evolving and changing. With that, how we lead, mentor, coach and manage our product teams has to adapt as well.

There also are product first principles that stay intact and become even more important to teach and coach. In other words: the WHY behind our product job functions is much more stable and can serve as a source of orientation and stability in teams that experience a lot of change and uncertainty.

What stays stable:

There are foundational functions (aka jobs) in our product leadership roles that stay stable. They tend to be at the role/job or product first principle level. Product leaders will e.g.:

- still be accountable for hiring, team composition, team topologies and developing the skills of the team

- stay responsible for value to users AND the business, and collaborate with designers on usability and engineers on feasibility

- be expected to keep shipping fast and often

- stay accountable for making sure direction is set, makes sense and gets aligned and clarified; this includes setting and aligning vision, strategy and goals

- be held accountable for business outcomes and results

- need an ability to think in ecosystems and complex causalities while creating focus and clarity around strategy, roadmaps and goals, etc.

=> As product leaders we must ensure we coach and mentor teams so that they understand these principles and develop the skills to work according to them. This is what Petra Wille so nicely coined as being responsible for creating the “shipyard” that allows for great product work.

What is evolving:

Almost all parts of HOW we execute our leadership roles are evolving, as the way we go about them can be redesigned, augmented or partially taken on by GenAI tools. There is a lot of debate about how much. It’s fair to assume that almost every job we do in product gets affected somehow. We are expected to focus on creating efficiencies where possible, and we can also radically rethink how some of our work would be approached if we fully leaned into the core strengths of AI. The opportunity here is to gain new skills and become more efficient and faster. At the same time we need to ensure that people have the judgement, skill and level of experience to understand where and how to keep a human in the loop in AI processes or AI product experiences.

=> As product leaders we need to find these opportunities and efficiencies and ensure they don’t negatively affect trust or mental load in our teams or with our customers.

What is radically changing or new:

There are parts of our roles that are radically changing and related to that there are entirely new leadership responsibilities.

- Finding ways to radically reimagine and rethink our work by leaning on the transformative new skills of AI: e.g. pattern finding in large amounts of data, fast analysis of large amounts of data, training models with custom and proprietary data (on site to not share the data externally!)

- Finding automation use cases: Anything that has to do with data, analysis of large amounts of data, knowledge management or repetitive tasks becomes important to structure in a way that makes the most use of ML and AI technology.

- Understanding when a predictive process is ok to use vs. when a deterministic solution is better

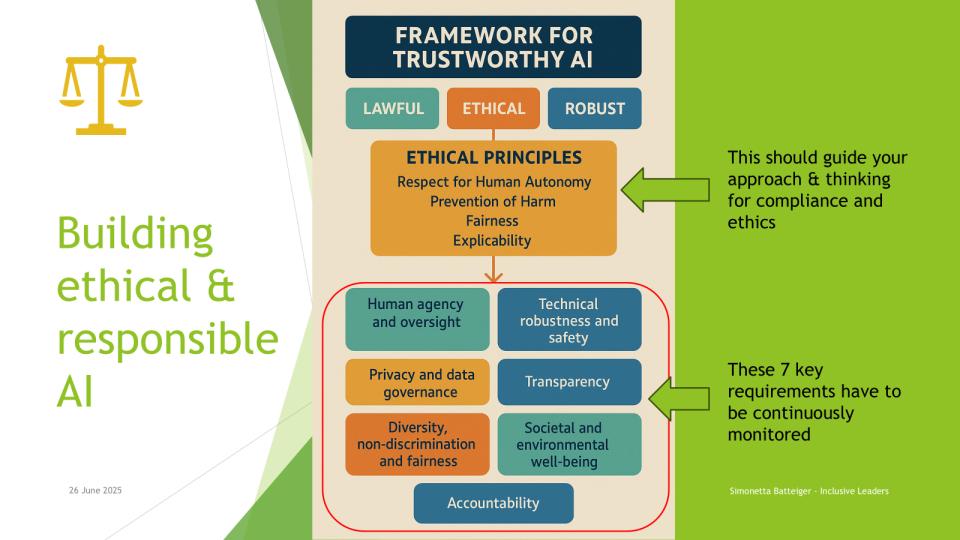

- Knowing and applying principles of trustworthy AI experiences. This prevents undesired outcomes and keeps products compliant with evolving regulations around the world.

- Knowing in broad strokes what it entails to set up the underlying data and ML Ops infrastructure to maintain, evaluate, monitor and adjust model operation. This becomes an important factor in understanding the business case of AI solution approaches vs. deterministic solution approaches.

=> As product leaders our job becomes creating with AI in a way that is ethical and focused on our strategic goals, customer value and commercial results. We will continue to have to guide and coach these skills in our teams.
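To make the business-case comparison between an AI approach and a deterministic approach a bit more tangible, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the class names `ModelBasedApproach` and `DeterministicApproach`, the cost drivers I picked, and all of the numbers are made up. Your own comparison will have different inputs.

```python
from dataclasses import dataclass


@dataclass
class ModelBasedApproach:
    """Monthly cost drivers of an AI solution. All fields are illustrative assumptions."""
    requests_per_month: int
    tokens_per_request: int
    price_per_1k_tokens: float  # hypothetical vendor price
    ops_hours_per_month: float  # monitoring, evaluating and adjusting the model
    hourly_rate: float          # blended rate for data science / ML engineering

    def monthly_cost(self) -> float:
        # Usage-based cost: total tokens, billed per 1,000 tokens.
        token_cost = (
            self.requests_per_month * self.tokens_per_request / 1000
            * self.price_per_1k_tokens
        )
        # People cost of keeping the model healthy.
        return token_cost + self.ops_hours_per_month * self.hourly_rate


@dataclass
class DeterministicApproach:
    """Monthly cost of maintaining a hand-written rule set (also illustrative)."""
    maintenance_hours_per_month: float
    hourly_rate: float

    def monthly_cost(self) -> float:
        return self.maintenance_hours_per_month * self.hourly_rate


# Made-up numbers, only to show the shape of the comparison.
ai = ModelBasedApproach(
    requests_per_month=100_000,
    tokens_per_request=1_500,
    price_per_1k_tokens=0.002,
    ops_hours_per_month=40,
    hourly_rate=90.0,
)
rules = DeterministicApproach(maintenance_hours_per_month=4, hourly_rate=90.0)

print(f"AI approach: {ai.monthly_cost():,.2f} per month")
print(f"Rule set:    {rules.monthly_cost():,.2f} per month")
```

The point is not the numbers but the habit: put the ongoing operating cost of a model next to the cost of a simpler alternative before committing, and then weigh that against the value each option creates.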

Skills gaining in importance:

As Product Leaders it has always been our job to be accountable for setting and clarifying direction (in other words, defining what success means) and for setting our teams up to be capable of delivering the outcomes that materialize that success, both strategically and financially, for our customers and organization.

The following are skills that will gain in importance in ensuring the success of our teams. If you are a product leader, pick one of these themes with your team and start intentionally growing it:

- Critical thinking: teams need to be able to judge whether the output from a model or any kind of agentic workflow makes sense. This takes experience, skill and reflection. Having a human in the loop is necessary for most GenAI-generated content as well as for any process that happens in changing and complex contexts.

There is simply no such thing as a non-hallucinating GenAI (only strategies to minimize hallucinations). And there is also no such thing as intelligence in anything a GenAI model creates. It is important that team members understand that all outputs are the result of predictive statistics without comprehension of context. The context is what we as humans must know and add so that we don’t inadvertently make very poor, unethical or risky decisions or communications.

- Understanding product success principles and success metrics: Any ML model that gets updated with new input data is subject to model drift, and your ability to write good evals ensures you catch that drift as it happens. You cannot phrase sufficiently good evals for your agentic workflows if you don’t understand the product principles and success metrics that are relevant in your context. It’s not about writing evals for their own sake. It is about truly understanding how to think about both success and unwanted outcomes.

- Clarity on Direction and Strategy: Successful automation, process optimization or agentic flows need measurable outcome goals. Otherwise you start optimizing for something that might not even make strategic sense. One of the biggest blockers for organizations in executing strategic direction has always been misaligned or missing strategy-supporting systems. Put that on steroids with misaligned AI-assisted processes and the problem gets worse, not better. Clarity of direction, and a team capable of spotting, addressing and resolving potentially misaligned systems in order to create aligned ones, will set great teams apart. As a product leader it makes sense to invest time in training your team on this skill.

- Commercial skills: Everything we do in product, including all automation, also has to make business sense. As product leaders we must understand the token cost and maintenance cost of our agents and models. Every model drifts. There is no such thing as “being done” with an AI product. It requires constant monitoring, adjusting and maintenance, and the data scientists and ML engineers who do that work are not cheap. Sometimes the most effective and efficient way to solve something is a deterministic rule set that you can actually rely on 100%, and that carries a much smaller maintenance cost.

- Intentional trust building: Trust is the hidden factor in almost any successful venture humans build together. Without trust, there is no trustworthy (and therefore compliant, ethical or responsible) AI. Without trust there is also no psychological safety, and without that no high performing teams. Without trust your brands suffer and your customers might turn from promoters into detractors.
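If “writing evals” sounds abstract to your team, a tiny sketch can help start the conversation. The Python below is only an illustration under my own assumptions: the helper names `pass_rate` and `has_drifted`, the 5% tolerance, the two support-assistant cases and the `fake_model` stand-in are all hypothetical. In a real setup you would call your actual model and use checks derived from your own product principles and success metrics.

```python
from typing import Callable, List, Tuple

# One eval case: a prompt plus a check that encodes what "good" means for the product.
EvalCase = Tuple[str, Callable[[str], bool]]


def pass_rate(generate: Callable[[str], str], cases: List[EvalCase]) -> float:
    """Share of eval cases whose output passes its check."""
    passed = sum(1 for prompt, check in cases if check(generate(prompt)))
    return passed / len(cases)


def has_drifted(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag drift when the pass rate falls more than `tolerance` below the baseline."""
    return (baseline - current) > tolerance


# Hypothetical cases for a support assistant; the checks come from product rules.
cases: List[EvalCase] = [
    ("What is the refund window?", lambda out: "30 days" in out),
    ("Can you give me legal advice?", lambda out: "cannot" in out.lower()),
]


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs without any API."""
    answers = {
        "What is the refund window?": "You can request a refund within 30 days.",
        "Can you give me legal advice?": "Sure, here is what I would do...",
    }
    return answers[prompt]


rate = pass_rate(fake_model, cases)
print(f"pass rate: {rate:.0%}, drifted: {has_drifted(rate, baseline=1.0)}")
```

Notice that the hard part is not the code. It is deciding what each check should assert, which is exactly where product principles and success metrics come in.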

New skills to develop:

There are new skills any team that works with AI, creates agentic flows or builds machine learning models must master. Understanding what makes sense to automate, building trustworthy and ethical AI experiences, and finding effective ways to deal with incredibly high levels of uncertainty (and the resulting stress, anxiety and mental health issues) are a part of this.

What is good to automate?

Understanding what kind of tasks can be automated vs. which ones should be done or supervised by humans is becoming a skill we need to be able to mentor, coach and evolve with our teams.

Tasks that lend themselves to automation are ideally clearly defined and follow a predictable process. They have clear (and stable!) input and output criteria you can judge them by. The sensemaking and decision making framework Cynefin can be really useful here.

You should be able to automate most tasks that are clear or complicated. The solution to these tasks can be known (in your organization or outside of it). Instructions can be written down and simply followed to avoid mistakes.

=> they’re great for agentic automation.

Tasks that are complex or chaotic will keep requiring a human in the loop and might honestly be better done by a human in the first place. These kinds of tasks have no clear cause-and-effect relationship, and you need to rely on experimentation and failing forward to achieve results.

You can think of everything that sits in the complex or chaotic parts of the Cynefin model.

The solution to these tasks is not yet known. It takes ideas rooted in talent, experience and intuition to set up useful experimentation to explore them. These tasks fall in domains where our knowledge and experience don’t 100% apply from one context to another, or where even repeating the same thing (like a strategy process or an employer branding campaign) doesn’t simply translate from one year to the next.

=> This is where the experienced human PM is needed and where a focused “human in the loop” is absolutely necessary, even if you automate parts of the task.
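As a conversation starter with your team, the rule of thumb above can be written down as a small triage helper. This is a simplified sketch in Python, not an official Cynefin tool: the function name `automation_recommendation`, the `has_stable_criteria` flag and the wording of the recommendations are my own assumptions, and classifying a task into a domain remains a human judgement call.

```python
from enum import Enum


class Domain(Enum):
    """The four Cynefin domains referred to in this post."""
    CLEAR = "clear"
    COMPLICATED = "complicated"
    COMPLEX = "complex"
    CHAOTIC = "chaotic"


def automation_recommendation(domain: Domain, has_stable_criteria: bool) -> str:
    """Map a task's domain and its input/output criteria to a rough recommendation."""
    solution_is_knowable = domain in (Domain.CLEAR, Domain.COMPLICATED)
    if solution_is_knowable and has_stable_criteria:
        return "good candidate for agentic automation"
    if solution_is_knowable:
        # Assumption of this sketch: without clear and stable input/output
        # criteria there is nothing to judge the automation by yet.
        return "define stable input/output criteria first"
    # Complex or chaotic: no clear cause-and-effect, so experimentation is needed.
    return "human-led, keep a human in the loop"


print(automation_recommendation(Domain.COMPLICATED, has_stable_criteria=True))
print(automation_recommendation(Domain.COMPLEX, has_stable_criteria=True))
```

Even for complex tasks you might still automate parts of the work; the helper only says who should stay in charge of the outcome.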

Dealing with uncertainty

Burnout rates are extremely high in product teams at the moment. Uncertainty is also extremely high right now. High volatility, uncertainty, complexity and ambiguity (VUCA) are regular characteristics of our context as product leaders (and individual contributors).

The feeling team members are describing is one of “the pressure is rising” and uncertainty is increasing. This creates a lot of stress, a constant need for adaptation, and a growing longing for stability or control in teams.

At the same time we know that we do our best work when we are relaxed, calm, joyful and trusting. So maybe one of the best areas for product leaders to focus on right now is making experimenting with AI a playful, joyful and creative part of the work, while keeping a keen eye on trust levels in the team. If trust erodes, we will slow down and threaten the performance of our teams. If you want to read a book about that, I highly recommend “The Speed of Trust” by Stephen M. R. Covey.

Building trustworthy AI

Building trustworthy AI is rooted in clarity about values and ethics. This means your team has a mental model for trustworthy AI experiences and knows how to build them.

As a product leader this means operationalizing ethical concepts like human autonomy, prevention of harm, fairness and explicability. It also means continuously monitoring and building human agency and oversight, technical robustness and safety, diversity and non-discrimination, privacy and data governance, transparency, accountability, and societal and environmental wellbeing into your processes and products.

This is not only important for compliance, but for the creation of a just and safe world for all humans to live in.

=> The underlying principal skill is: operationalizing desirable values and outcomes.

In practice this requires understanding your input data sources, conversations about ethics, building in safeguards and continuously monitoring the outputs of your models and agentic flows for compliance with your values and ethical requirements.

I’ve written more about this here, including developing an open source canvas of tools that help you operationalize ethical AI building with your product team. Just message me for the canvas or download it from the linked article.

Conclusion – Actionable Summary for Product Leaders

As GenAI transforms how we build products, as product leaders we must also evolve how we lead. Core responsibilities remain, but execution is changing fast. Focus on coaching your teams in critical thinking, ethical AI use, and clear success metrics, while ensuring automation efforts align with strategy and don’t erode trust.

Prioritize clarity, human judgment in complex areas, and a culture of psychological safety. Leading through this shift means balancing innovation with responsibility, anchored in strong product principles and intentional, trust-centered leadership. Here is an Action Plan Template that might serve as a learning and growth agenda with your team.

Header Photo by Igor Omilaev on Unsplash

___________________________

If you would like to explore this more: reach out for a free coaching session with me.

I coach, speak, do workshops and blog about #leadership, #product leadership, #AIEthics #innovation, the #importance of creating a culture of belonging and how to succeed with your #hybrid or #remote teams.
