If you are like most people, this topic may seem daunting. Thinking about ethics can feel complex, difficult and overwhelming. Or it could be fun, creative and super fulfilling. My aim with this blog post is to make the case for the latter framing and to invite you to explore this aspect of product management with lots of curiosity, agency, power and courage. I believe that thinking about ethics is a big part of the key to joy and fun at work!

Why am I saying that ethics are fun?

Because it’s a super creative and fulfilling experience to see something you created have a positive impact in the world. When I work with my coaching clients, and it comes down to what people phrase as fulfilling visions for themselves, it most often revolves around leaving a positive impact in the world. Doing something good that is greater than themselves. And feeling happy about seeing how their actions and choices made life better for themselves and people around them.

And isn’t that at the core of building great products, too? We want to solve real problems for our users and clients. We want to make life easier for them and get paid for the positive contribution our solutions make in the world. Ethics has a direct relationship with building products that people love and the personal fulfillment you get out of your job.

What products do people love?

People love products that add to their sense of wellbeing, that are entertaining, joyful, beautiful and, most importantly, useful to them. People tend to love products that add a sense of ease and joy to their lives more than products that only make a problematic issue less cumbersome. The latter feels like something that merely gets you back to feeling ok. The former makes you actually experience a sense of happiness, joy, excitement and aliveness. Something all of us would love to have more of in our lives.

What does this sense of joy have to do with ethics, you might ask…

To answer that, it might help to look at a few definitions of ethics and how we might translate them into questions that guide your product thinking:

Ethics is the discipline concerned with what is morally good and bad and morally right and wrong. (Britannica)

I might therefore ask myself: What kind of world am I building?

Ethics is a set of moral principles or a guiding philosophy (Merriam Webster)

I can ask: What are my values?

Ethics is concerned with distinguishing between good and evil in the world, between right and wrong human actions, and between virtuous and nonvirtuous characteristics of people (Dictionary.com)

I can ask: What is the best version of myself?

Ethics is a set of concepts and principles that guide us in determining what behavior helps or harms sentient creatures (Wikipedia)

I can ask: What behaviors do I invite?

When you put your idea of a positive contribution to the world, your values, your idea of the best version of yourself (or humankind) and the most positive behavior you might invite at the core of building products, you are much more likely to build something people actually love. At the same time, it will inform and guide your thinking about the impact you do not want to create. Starting from there you can literally encode your vision for the world you want to create into your product.

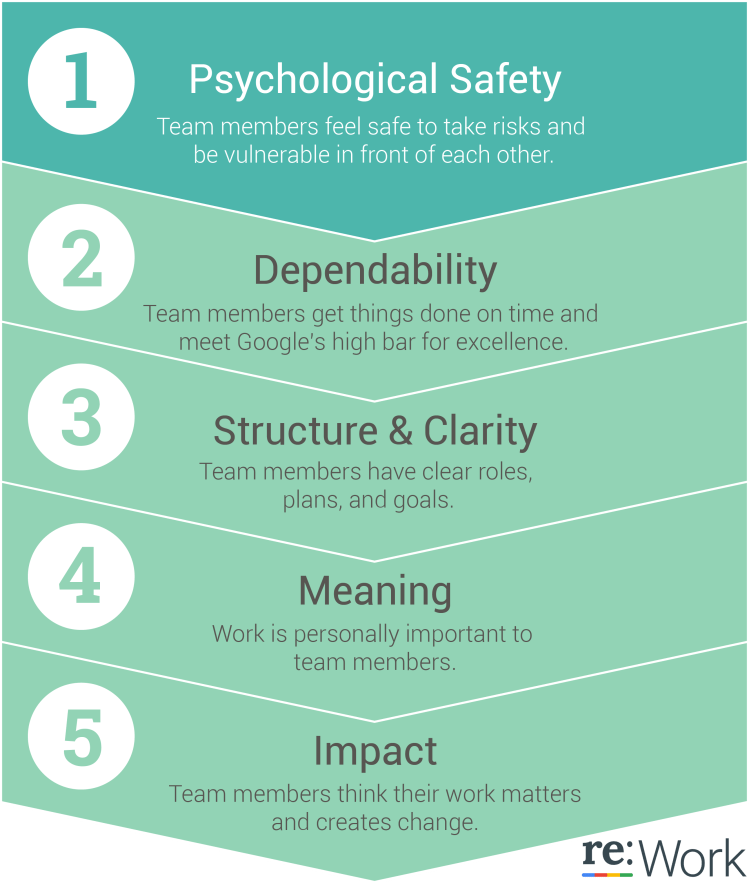

You will also be much more likely to build your products in a team that enjoys working together and is generally happier. Building something you believe in and that aligns with your team’s values directly pays into the meaning and impact pillars of high-performing teams. The image below describes the five attributes high-performing teams have in common according to research done at Google. More fulfilling work contributes to happier teams, and happier teams will in the end be more successful.

So how do you practically do this, you might wonder?

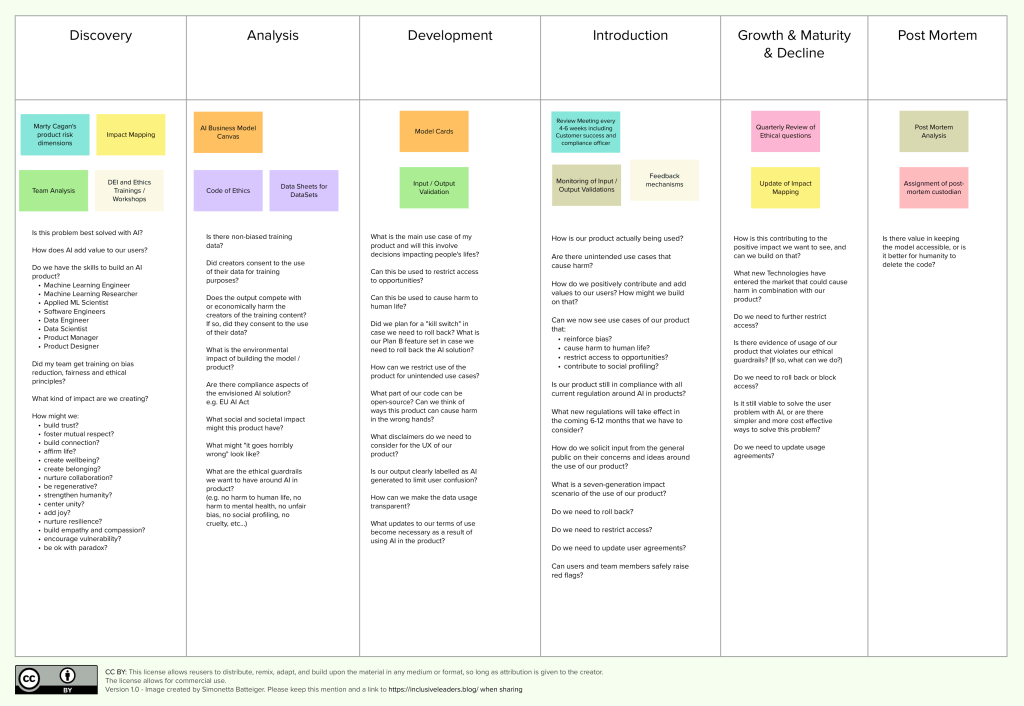

There are many ways in which we can make ethical considerations (the world we want to build, the values we want to center, the behaviors we want to invite, the best version of humanity we want to contribute to) part of our product work. The most important work here is to be clear about what kind of impact we want to create and what values (or product principles) guide our thinking, and then to be systematic and intentional about encoding, monitoring and evaluating these principles as part of the product work we do. The image below gives an idea of the kind of workshops and artefacts you might want to create, and some of the questions you might want to ask yourself at each stage of the product lifecycle:

You can download and use this canvas at any time, as long as you give credit to Inclusive Leaders.

For all of these workshop ideas and resources, there are great templates that can get you started without having to re-invent the wheel. I will make further resources available in the coming weeks. If you have ideas or links to great tools, please comment or get in touch with me through LinkedIn.

Who should be part of the team considering ethics for your products?

As with everything in product, these ethical questions are best discussed, aligned on and built on as a team. To do this well you’ll need to find a way to incorporate the voice of your users and the impact you have on them, your team’s vision and values, a compliance perspective and the insights of your data, design and engineering leads. This requires thinking beyond the perspectives you personally hold: consider the impact your products may have in different cultures or on demographics different from your own. You’ll need a thoughtful strategy to inquire into, assess and monitor these. There are compliance questions resulting from regulations that are being drafted in the EU and the US at this point (and will likely be adopted in other parts of the world as well!), so make sure you partner with your compliance roles on this. You are most likely to stay compliant when you build your products to be fair, trustworthy and intentional about their positive impact on sentient beings. Whatever format you choose, think about the positive contribution you want to make and how you can limit the risks your product might pose for humankind and our planet. We each have one life and share one planet between all of us.

What might a starting point of values look like that I can discuss with my team?

Personally I would center the following values in my products (and honestly in life!):

| trust | respect | connection |
| wellbeing | belonging | collaboration |
| regeneration | unity | joy |
| resilience | empathy | compassion |
| authenticity | generosity | balance |
| beauty | usefulness | viability |
| inclusion | equality | fairness |
| safety | privacy | human in the loop |
| transparency | beneficial | consent |
| honesty | creativity | abundance |
| kindness | empowerment | diversity |

If you center these values in your solutions, you will likely create a positive impact on the world and create products that are useful and fair, and that contribute to the realization of human preferences, opportunities and potential.

Akshay Kore uses the following ethical principles as a way to think about the risks of building AI products:

A system that is not trustworthy is not useful.

Ensure your system is trustworthy (e.g. explainability, transparency, non-biased)

Ensure your system is safe.

Ensure your system is beneficial.

(Source: Designing Human-Centric AI Experiences, Akshay Kore, Apress)

So how might working with these values look in practice?

Let’s take an example. Let’s look at honesty and transparency. When these two things matter to you, you might focus on the explainability of your model, the creation of data sheets and model cards, and the clear labelling of outputs that are AI generated vs. outputs that have a human in the loop vs. outputs that are genuinely human. Consider your chatbot, for example: is it powered by a human, or is it powered by an AI? Does it present pre-scripted and reviewed responses? Or is there a chance that it makes responses up? Can you clearly distinguish the source of the response? What labelling is in place? Is it clear to the user what they are getting? Or does it mislead the user into thinking they are dealing with a human? Is it clear to the user what happens with the data they feed into the bot? You have a lot of influence in your design and UX choices to create transparency and trust here, or to open yourself up to being viewed as untrustworthy, misleading or even negligent (e.g. if your chatbot delivers personal advice and gets a user to form emotional bonds while having no understanding of a moral compass or of mental health issues).
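To make the labelling idea a bit more tangible, here is a minimal sketch in Python of how a chatbot response could carry a visible source label. The three categories mirror the distinction in the paragraph above; the names `Source`, `Response` and `render` are hypothetical and only meant as illustration, not as a reference to any particular chatbot framework.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    """Who or what produced a response (illustrative categories, not a standard)."""
    AI_GENERATED = "AI generated"
    HUMAN_IN_THE_LOOP = "AI generated, reviewed by a human"
    HUMAN = "Written by a human"


@dataclass(frozen=True)
class Response:
    """A chatbot reply that always travels together with its source."""
    text: str
    source: Source


def render(response: Response) -> str:
    """Prefix the reply with its source label so the user sees where it came from."""
    return f"[{response.source.value}] {response.text}"


reply = Response("Your order ships on Monday.", Source.AI_GENERATED)
print(render(reply))
```

Making the source a required field means a response cannot be shown to a user without someone deciding how it should be labelled. How the label looks in your interface is a design choice for your team.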

Let’s take a second example and look at fairness, equality and inclusion. When these values matter to you, you might think about what fair, equal and inclusive behaviors look like and build products that nurture them. For example, your product might focus on equal speaking time in meetings: you might measure it, then nudge and encourage those who speak little to contribute more, while helping those who speak a lot to listen more and give space to their peers. Or you might consciously use algorithms that mitigate bias in training data, in classifiers and in predictions. As a result, you might present output predictions to users that use more inclusive language, and thereby role-model and promote the use of inclusive language. After all, the output from your model might become the input for the next one and serve as inspiration for the users of your product.
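The speaking-time example could be sketched roughly as follows. This is a simplified illustration under assumptions of my own: speaking time per person is already available in seconds, an equal share is the target, and the `tolerance` threshold and nudge wording are hypothetical values your team would want to choose for itself.

```python
def speaking_shares(seconds_by_speaker: dict[str, float]) -> dict[str, float]:
    """Return each person's fraction of the total speaking time."""
    total = sum(seconds_by_speaker.values())
    if total == 0:
        return {}  # nobody spoke, so there is nothing to compare
    return {name: secs / total for name, secs in seconds_by_speaker.items()}


def nudges(seconds_by_speaker: dict[str, float], tolerance: float = 0.5) -> dict[str, str]:
    """Suggest a nudge for people far below or far above an equal share.

    `tolerance` is an assumed threshold: 0.5 means a nudge is suggested
    once someone is 50% below or above the equal share.
    """
    shares = speaking_shares(seconds_by_speaker)
    if not shares:
        return {}
    equal_share = 1 / len(shares)
    suggestions = {}
    for name, share in shares.items():
        if share < equal_share * (1 - tolerance):
            suggestions[name] = "invite to contribute"
        elif share > equal_share * (1 + tolerance):
            suggestions[name] = "encourage to make space"
    return suggestions


meeting = {"Ana": 600, "Ben": 300, "Chi": 100}
print(nudges(meeting))
```

In this made-up meeting, Ana holds 60% of the speaking time and Chi 10%, so both receive a suggestion while Ben does not. Whether an equal share is the right target depends on the meeting, which is exactly the kind of question to discuss with your team.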

Where is the opportunity here?

This brings me back to my original thesis that thinking about ethics in building products with AI can be fun, creative and super fulfilling. The opportunity here is to use fair and trustworthy models and to intentionally design the user experience of your products to create the world you’d like to live in. Based on principles of human interaction that you would see as the best version of ourselves. Making it more likely e.g. that our world becomes a more fair, united, thriving and collaborative place.

Considering ethics also allows you to be thoughtful, intentional and deliberate around the risks you want to safeguard against, avoid and monitor. After all you and your team want to feel great about the positive impact your products are having in the world, not lose sleep at night over the unintended negative impact you might create. Or create a compliance nightmare or PR disaster for your organization.

How do I get started?

Everything in life starts with a first step. Ideally one that you take in an intentional direction. The initial list of values and their ranking, plus the patterns you want to avoid (e.g. reinforcing bias, social profiling, reinforcing privilege, negative impact on the physical or mental health of users, compliance risks), are best explored in a dedicated workshop. I’m happy to help you design one if you need help with this.

My suggestion would then be: build timeboxes for checking your list of values against the impact you actually create into your standard ways of working. This could live in your strategy sessions (e.g. creation and review of values, impact mapping, AI business model canvas, etc.), be part of the checks you hold against your OKRs, be part of test plans and automatic monitoring, come to life in a question you ask yourself in a retrospective, be part of your triage process for incoming user complaints, or be part of what you evaluate in user experience research conversations. You as a product manager could set yourself a monthly reminder to spend half an hour intentionally asking yourself whether your values and impact still align. And then identify an intervention when necessary. And you can follow thought leaders in this field on LinkedIn or through their blogs. People like Timnit Gebru, Ana Chubinidze, Akshay Kore, Gary Marcus or the Center for the Advancement of Trustworthy AI.

AI, like any tool, has the potential to create harm as well as the potential to positively shape our world. You as teams building these kinds of products are in the driver’s seat of creating that impact. That is truly exciting and has been part of what made product work fun for me for a long time. Building good products that align with your values feels fulfilling, joyful and fun! Thinking about ethical questions and aligning your work with the results makes it much more likely that you enjoy what you do at work.

Title Photo by Markus Spiske on Unsplash

___________________________

If you would like to explore this more: reach out for a free discovery session with me.

I coach, speak, do workshops and blog about #InclusiveLeadership, #Product Leadership, #innovation, the #importance of creating a culture of belonging and how to succeed with your #hybrid or #remote teams.

